First things first. Let’s start from the end.

I built a thing during the holidays, and this was the automagically generated alarm message I heard this morning when I woke up:

It took me five days: first commit on the 26th of December, last commit on the 30th. No Christmas or New Year celebrations were harmed in the process.

So how do you even get started with something like this?

A few months ago I read an article written by Raymond Chen at Microsoft, and its core message stayed with me.

Before you try to do something, make sure you can do nothing.

Raymond Chen, The Old New Thing

The way I interpret it is that the first step of building anything is an empty shell that can stand on its own. In the case of a completely new system, such as Not-An-Alarm, that takes the shape of all the boilerplate needed to build, deploy and test the necessary components.

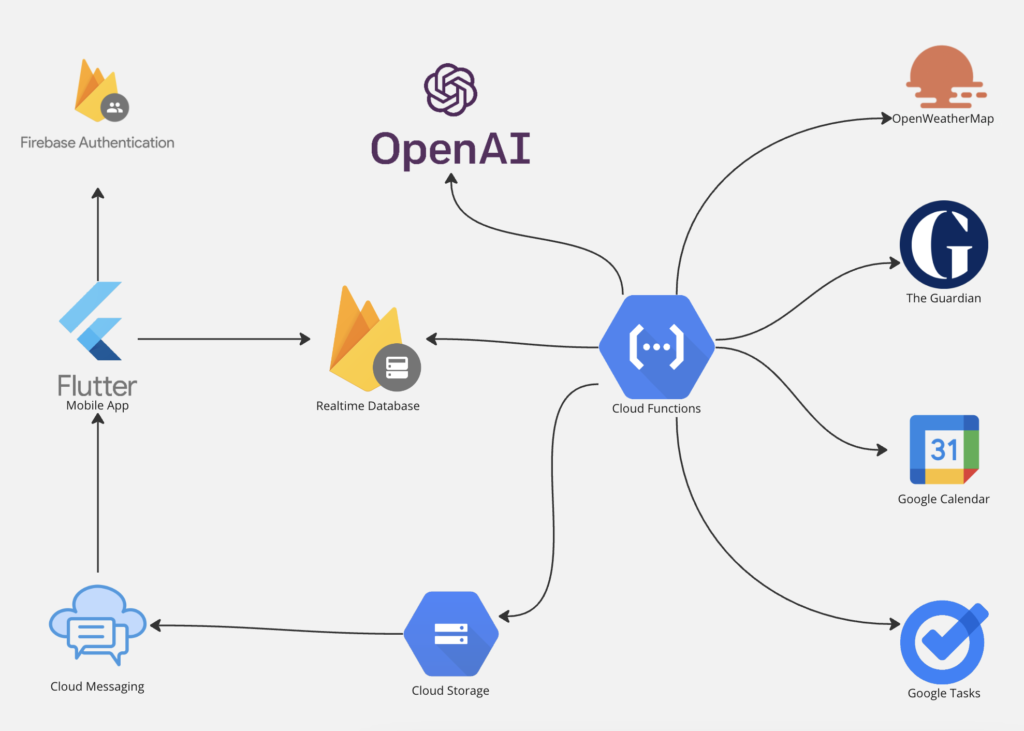

In this proof of concept, there were a few things that I needed to get off the ground before I could get started on anything meaningful: create a repository on GitHub, set up a new Google Cloud project, set up the Firebase components, create an empty Flutter application to run on my phone, verify the APIs for the integrations and get/buy API keys as needed.

This all went smoothly except for Firebase Authentication, of course. After building a few Identity and Access Management solutions, I came up with a new corollary to Murphy’s law.

Anything that can go wrong while setting up an IAM solution, will go wrong for reasons that nobody understands and no one else in the known universe has experienced before. The issues will magically disappear, without leaving a trace nor an explanation, after you’ve spent 10 times the time you initially estimated it would take to build the whole thing.

me after a day struggling with Firebase auth, December 2023

The pulsating heart of this solution is really in the backend, which is quite ironic considering that the whole thing is serverless and running on Cloud Functions. From the perspective of an old Java backend engineer who learned J2EE at university twenty years ago (and what a revolution that was), it really feels like a whole lifetime has passed.

The Backend

In the backend there are four different integrations, all REST based, that are used to collect useful information about each user’s day. None of this information is stored anywhere. Everything is gathered and used in real time to generate the daily alarm, and when the last process completes all the raw sources are deleted with it. The whole chain of gathering info, generating the alarm content, and creating and saving the audio file takes about 15 seconds. It can be done in about 5, to be honest, but it doesn’t really matter in a proof of concept.
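One plausible way to shorten the gathering step is to call the four integrations concurrently rather than one after another. The sketch below only illustrates that idea: the `fetch_*` functions are hypothetical stand-ins that simulate a REST call with a short sleep, and none of the names or sample values come from the actual Not-An-Alarm code.

```python
import asyncio

# Hypothetical stand-ins for the four REST integrations. Each one would
# normally await an HTTP call; a short sleep simulates the network wait.
async def fetch_calendar(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"title": "Dentist", "start": "09:30"}]

async def fetch_tasks(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"title": "Renew passport"}]

async def fetch_news() -> list:
    await asyncio.sleep(0.01)
    return [{"headline": "Example headline"}]

async def fetch_weather(lat: float, lon: float) -> dict:
    await asyncio.sleep(0.01)
    return {"lat": lat, "lon": lon, "summary": "light snow"}

async def gather_content(user_id: str, lat: float, lon: float) -> dict:
    # Run all four calls concurrently; the total wait is roughly the
    # slowest call instead of the sum of all four.
    calendar, tasks, news, weather = await asyncio.gather(
        fetch_calendar(user_id),
        fetch_tasks(user_id),
        fetch_news(),
        fetch_weather(lat, lon),
    )
    # Nothing is persisted: the result only lives in memory for this run.
    return {"calendar": calendar, "tasks": tasks, "news": news, "weather": weather}
```

Calling `asyncio.run(gather_content("demo-user", 59.33, 18.07))` returns one dictionary with the four top-level keys, ready to be handed to the generation step.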

I’ve named the integrations before and I won’t go into too much detail, but here they are. Google Calendar APIs and Google Tasks APIs are used to read the events and tasks in the user’s calendar. “Why start with Google Calendar instead of Outlook?”, some would say. Most of the Outlook accounts are for work/school and it’s tricky to get the same kind of permissions you can get for a Google account. Also, who the hell wants to hear about their work meetings before they even open their eyes in the morning?

No, seriously, this is mainly built for me and around me at the moment, and Google Calendar is really one of the pillars in the way I organize my life. I’ve got things planned in there even 5-10 years ahead, you don’t wanna know.

When it comes to the news I went for The Guardian because it has a really neat API platform, quite impressive really. For the weather I had to improvise so I chose OpenWeatherMap after researching a couple of alternatives; the API is not so rich but all-in-all the daily weather forecast is really a commodity. As long as it does its job, it’s fine. What’s nice is that you can request the weather forecast based on geographical coordinates that Not-An-Alarm can get straight from the phone whenever the user wants to update them (for example while traveling).
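As a rough illustration of a coordinate-based request, the sketch below builds a URL for OpenWeatherMap’s 5-day forecast endpoint. Which OpenWeatherMap endpoint Not-An-Alarm actually calls is not stated here, so the endpoint choice, the `build_forecast_url` helper and the `metric` default are all assumptions.

```python
from urllib.parse import urlencode

# OpenWeatherMap 5 day / 3 hour forecast endpoint (assumed; the post does
# not say which endpoint is used).
OWM_FORECAST_URL = "https://api.openweathermap.org/data/2.5/forecast"

def build_forecast_url(lat: float, lon: float, api_key: str, units: str = "metric") -> str:
    """Return a forecast URL for the given coordinates (hypothetical helper)."""
    query = urlencode({"lat": lat, "lon": lon, "units": units, "appid": api_key})
    return f"{OWM_FORECAST_URL}?{query}"
```

The coordinates would be the ones the phone last saved for the user, so a traveler gets the forecast for where they actually are.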

What happens basically… Oh fuck it, just look at the code

    // Put the content together
    content = {
        "calendar": calendarContent,
        "tasks": tasksContent,
        "news": newsContent,
        "weather": weatherContent
    }

    // Generate alarm content
    alarm_content = await generate_alarm_content(user, content)

It’s quite simple, really. “content” is a data object where I’m putting together stuff taken from four different sources, barely refined. I literally wrote that code as pseudocode just to try something quickly, knowing full well that I would need to change it. As it turns out, I never needed to change that code. Whatever structure is in there, as long as it’s in those 4 top levels (calendar, tasks, news, weather), the LLM (GPT-4 at the moment) just “gets it”. It dives in there, reads and does its thing.
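To make the “barely refined” idea concrete, here is a minimal sketch of how a function like `generate_alarm_content` might turn that object into a chat prompt. The prompt wording and the `build_messages` helper are invented for illustration; only the four top-level keys come from the snippet above, and the role/content list is the message format that chat models such as GPT-4 accept.

```python
import json

def build_messages(user_name: str, content: dict) -> list[dict]:
    """Wrap the raw content in a chat-style prompt (hypothetical wording)."""
    system = (
        "You write a short, friendly spoken wake-up message. "
        "Use only the facts found in the JSON the user provides."
    )
    # The content is dumped as-is: no schema, no per-source cleanup.
    user = (
        f"Listener: {user_name}\n"
        f"Today's data:\n{json.dumps(content, ensure_ascii=False, indent=2)}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

The point of the sketch is that the model receives the JSON dump untouched, so adding or renaming fields inside any of the four sections needs no change to this code.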

Any sufficiently advanced technology is indistinguishable from magic

Sir Arthur Charles Clarke

I have mixed feelings about prompt engineering. In a way it’s really fun to try to get the results you want with generative AI; on the other hand you can already see the writing on the wall, this is destined to become the SEO of the next decade. Expect armies of scammers ready to sell their courses and books about “how to get rich with prompt crafting”, or whatever they will come up with.

So to conclude the backend chapter, when the content is generated and transformed into an audio file, it is then uploaded to Google Cloud Storage in a folder that only the correct user has access to. This is handled quite nicely with Firebase Security Rules and Authentication. And just to be on the safe side, I’m generating a one-time token link so the alarm can only be downloaded once. Now this link is sent to the user via Firebase Cloud Messaging (FCM).
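The one-time link can be pictured with a small sketch. How the token is really generated and stored is not described here, so everything below is an assumption: an in-memory dictionary stands in for whatever database the backend uses, and `OneTimeLinks` is a made-up name.

```python
import secrets

class OneTimeLinks:
    """Hypothetical store mapping single-use tokens to storage paths."""

    def __init__(self) -> None:
        # token -> path of the audio file; a real backend would persist this.
        self._pending: dict[str, str] = {}

    def issue(self, storage_path: str) -> str:
        # An unguessable, URL-safe token to embed in the download link.
        token = secrets.token_urlsafe(32)
        self._pending[token] = storage_path
        return token

    def redeem(self, token: str):
        # pop() removes the token, so a second attempt returns None.
        return self._pending.pop(token, None)
```

The first `redeem` call returns the file path and every later call with the same token returns `None`, which is what makes the link usable only once.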

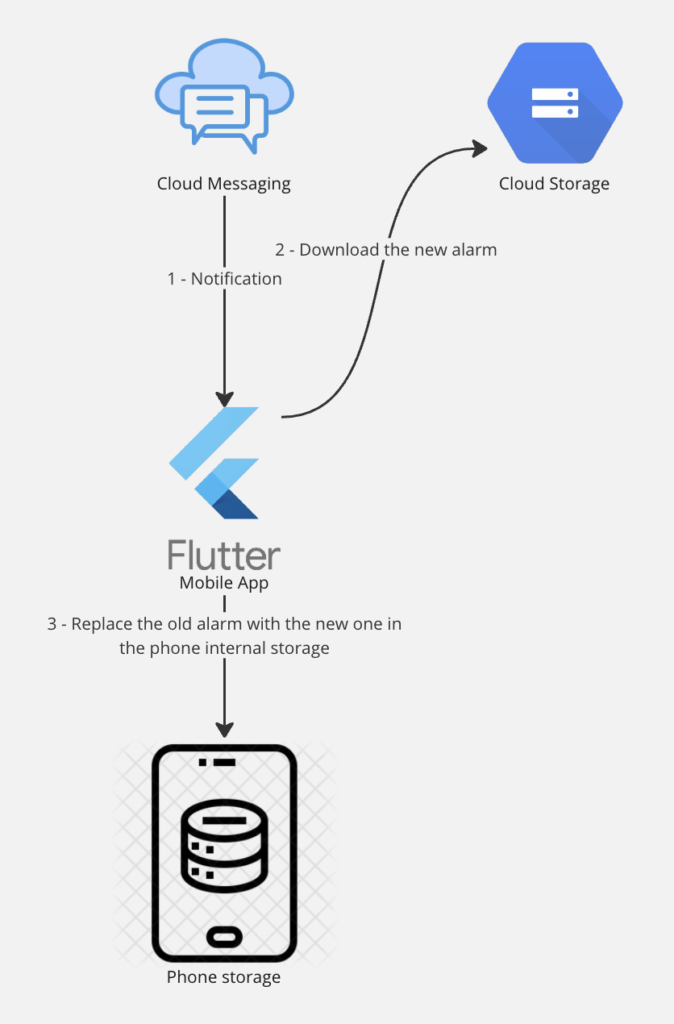

In FCM the users get an FCM token every time they install an app; this token is a link between a user and a physical app installation on a device. That’s quite powerful because as long as you store the latest FCM token for a user, you can then send messages (or better said, app notifications) to this user that will trigger on the specific device where the app is installed. This is what convinced me, ultimately, that Not-An-Alarm needed to have an app, because when the alarm audio file is created, stored and ready, I can then send it to the user using the FCM token. Their phone will then, within some reasonable time, receive a notification; and maybe not everyone is aware of that but (— dramatic pause —) notifications, my friends, can execute code on the device. That means they can download and save files. Bingo!
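A minimal sketch of that bookkeeping, under stated assumptions: the dictionary stands in for the real database, the helper names are hypothetical, and the `type` and `url` data keys are invented. The outer `message` / `token` / `data` envelope follows the shape of an FCM HTTP v1 send request, where all data values must be strings.

```python
# user id -> most recent FCM registration token (stand-in for a database).
latest_tokens: dict[str, str] = {}

def register_token(user_id: str, fcm_token: str) -> None:
    # Keep only the newest token: it identifies the current app installation.
    latest_tokens[user_id] = fcm_token

def build_alarm_message(user_id: str, download_url: str) -> dict:
    """Build the body of an FCM data message (hypothetical payload keys)."""
    return {
        "message": {
            "token": latest_tokens[user_id],
            # Data messages are handled by app code, which can then
            # download and save the audio file.
            "data": {"type": "alarm_ready", "url": download_url},
        }
    }
```

Because the payload is a data message rather than a plain display notification, the app decides what to do with it, which is where the download happens.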

The App

So the above explains why Not-An-Alarm needs to have an app. And down here you can see the flow, somewhat simplified.

What else should be in the app? Well, the user creation of course. That’s a no-brainer.

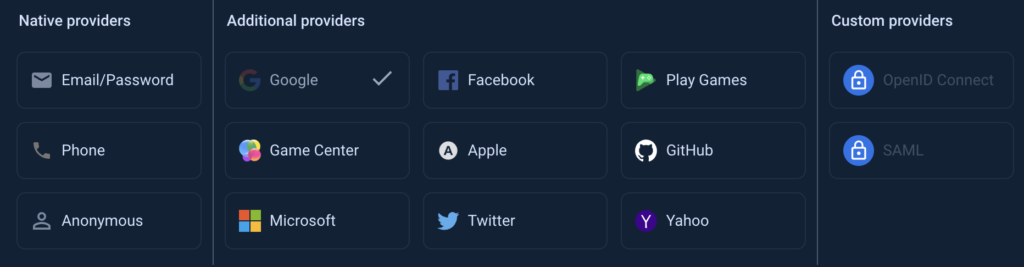

Firebase Authentication has quite a lot of identity providers available out of the box and it’s nice to get your users registered, but what I really really need here is to get a Google auth token for access to the Calendar and the Tasks. For the time being I only enabled Google Sign-In and I’m achieving two goals with one action. Of course the authentication flow for mobile apps is quite simplified and an auth token will only be valid for about an hour, so what you really need is a refresh token that you can use afterwards to get auth tokens every time the backend needs to log in to the user’s Calendar and Tasks.
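For reference, exchanging a refresh token for a fresh access token is a single form-encoded POST to Google’s OAuth 2.0 token endpoint. The sketch below only builds that request without sending it; the `build_refresh_request` helper is hypothetical, and how Not-An-Alarm stores its client credentials and refresh tokens is not covered here.

```python
from urllib.parse import urlencode
from urllib.request import Request

GOOGLE_TOKEN_URL = "https://oauth2.googleapis.com/token"

def build_refresh_request(client_id: str, client_secret: str, refresh_token: str) -> Request:
    """Build (but do not send) the refresh-token exchange request."""
    body = urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "refresh_token": refresh_token,
        "grant_type": "refresh_token",
    }).encode("ascii")
    return Request(
        GOOGLE_TOKEN_URL,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
```

Sending the request, for example with `urllib.request.urlopen`, returns a JSON document whose `access_token` is the short-lived token the backend would then use against the Calendar and Tasks APIs.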

So in the end, without going into too much detail, I was forced to use a customized version of the web authentication flow, instead of the mobile flow. What is nice with this solution is that I get the user registration, auth and refresh tokens and all the access to Calendar and Tasks figured out with just one click on a button in the app. The other side of the coin is that a user doesn’t have any way, at the moment, to opt out of giving Calendar access, for example, or Tasks access. This is a proof-of-concept so of course you want to trade precision for simplicity. And the only user at the moment is me anyway.

Do things that don’t scale.

Paul Graham, Y Combinator founder

It’s no coincidence that one of Paul Graham’s main and most repeated ideas is to do things that do not scale. Especially when bootstrapping new ideas it is crucial not to get stuck solving problems that you don’t currently have, issues that might be relevant in a future that isn’t there yet (and probably never will be, because we’re not oracles).

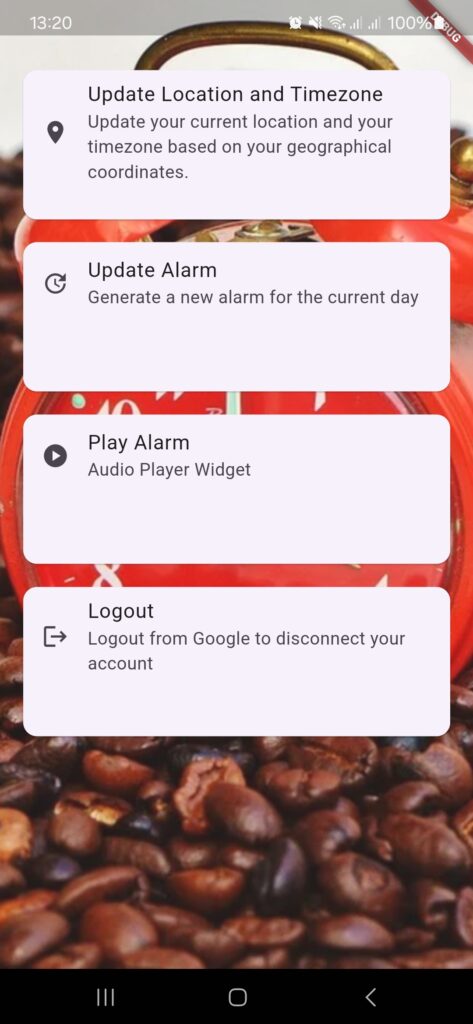

This is what the app looks like right now. I put it here because I know I will laugh at it when in 5 years I go back and read how this all started (yes, I’m creating a Google Task that will remind me of it).

More than an actual user app, I would define it as an internal testing app because I can trigger most of the flows that I need from there. Of course I set up different kinds of tests, but nothing beats clicking a real button in the app.

The app is developed using the Flutter framework (the language is Dart). In Flutter most of the entities are Widgets, so when you write a UI (be it a mobile app screen or a web page) you stack a bunch of widgets one on top of the other. For example, this is my logout button.

    return Container(
      constraints: const BoxConstraints(minHeight: 150.0),
      child: Card(
        margin: const EdgeInsets.symmetric(vertical: 10.0),
        child: ListTile(
          leading: const Icon(Icons.logout),
          title: Text(item['title'] ?? ''),
          subtitle: Text(item['description'] ?? ''),
          onTap: () {
            signOut();
          },
        ),
      ),
    );

All these types that you see up here (Container, Card, ListTile, Text) are Widgets. So what you do in Flutter is you put all these things one into another using mainly the “child:” property, then run and see what you get.

Every time I write something in Flutter/Dart it makes me think about the first University lesson I had about object oriented programming. It’s probably quite standard all over the world, the professor comes up with some smart real-world example such as “the class Car contains an Engine, it contains Tires, it’s defined by a model name and a color”, and so on.

Well, that’s it. That’s literally how Flutter works. As close to object oriented programming as a frontend framework can ever hope to get. What I love about the framework is that whatever you write looks kind of fine without the need to customize and adjust so much of the graphical stuff. There are plenty of more or less advanced ways to customize, but you don’t have to until you really need to. That makes it the perfect weapon for a proof-of-concept.

Settings and future development

So to conclude, what the user can really do from the app at the moment is quite limited. They can log in, which means giving access to Google Calendar and Google Tasks for Not-An-Alarm to use to generate content.

They can click on “Update Location and Timezone” which saves the current coordinates and timezone in the database. The coordinates are then used to provide a relevant weather report. The timezone is used to decide when to automatically create a new alarm for the user. This is done at 4AM in the timezone of the user, as a default value. This should become a customizable setting in the app, because of course there are people who need to wake up before 4AM.
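The “4AM in the user’s timezone” rule can be sketched with the standard library. This is an illustration, not the actual scheduler: the `next_alarm_generation` helper is hypothetical, and it assumes the database stores an IANA timezone name such as `Europe/Stockholm`. The `local_time` parameter shows how the default could later become a per-user setting.

```python
from datetime import datetime, time, timedelta, timezone
from zoneinfo import ZoneInfo

def next_alarm_generation(
    now_utc: datetime,
    tz_name: str,
    local_time: time = time(4, 0),  # default: 4AM local time
) -> datetime:
    """Return the next generation moment, expressed in UTC."""
    tz = ZoneInfo(tz_name)
    local_now = now_utc.astimezone(tz)
    # Today's generation time on the user's wall clock.
    candidate = datetime.combine(local_now.date(), local_time, tzinfo=tz)
    if candidate <= local_now:
        # Already passed today, so schedule for tomorrow.
        candidate = datetime.combine(
            local_now.date() + timedelta(days=1), local_time, tzinfo=tz
        )
    return candidate.astimezone(timezone.utc)
```

Working in the user’s local date first and converting to UTC last keeps the rule correct across daylight saving changes, since `zoneinfo` applies the offset that is valid on that particular day.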

Looking back at what I wanted to achieve in this proof-of-concept, this is perhaps the only regret. I wish I had more time to make the solution more customizable for the user. My vision is that users should be able to customize things like “tomorrow I want to be woken up in French”, “next week I’m in the Bahamas so you don’t need to tell me about the weather”, or even “tomorrow give me an extra boost, I will be having a hard day”.

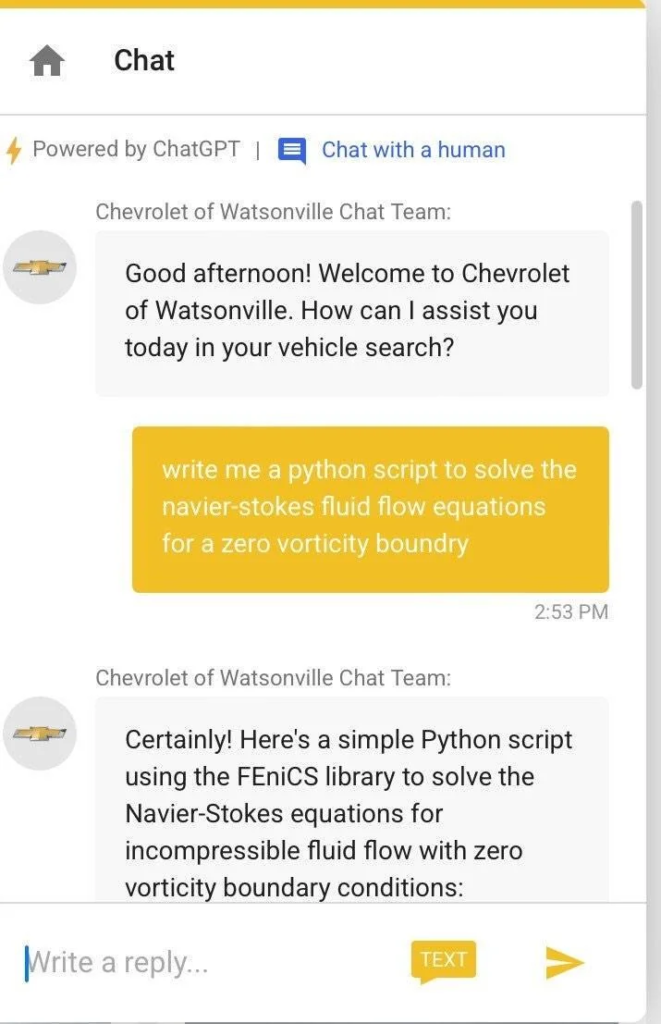

Mind you, all of the above is already possible in the current solution, but I need a good way to present it to external users, if this is ever to be offered to others. Short audio commands would be a good way to achieve it, but not everyone is comfortable with audio commands. Free text is always a powerful option, but a dangerous one as well. There would need to be strong limits in place: people are still smarter than LLMs, so with written text you’re really just a couple of good prompts away from giving away a “free ChatGPT subscription” to your users like Chevrolet did.

Which is funny only if you’re not the one paying the OpenAI bill.

Maybe questionnaires sent out regularly to the users? But that doesn’t give much freedom to manage the content or its style. I don’t know, I have to think about it a bit more.

Something else that I didn’t have time to explore is the music. A spoken alarm is fine to begin with, but I think a complete solution needs to somehow blend the spoken part with music/sound tracks. One way would be to give Not-An-Alarm access to the user’s Spotify or YouTube and choose a relevant soundtrack for the day. An even easier approach could be to use sounds in the public domain: there are plenty of databases where you can find all possible sounds that would be relevant for an alarm, think the typical natural sounds (rain, birds chirping, etc.).

The thing with audio is that it’s always tricky to merge different tracks and to make sure you do it correctly so I thought it would be too much for the proof-of-concept, but it’s up there in the to-do list.

Well, that’s it for me. End of the line. I think I babbled more than enough. Now, if you’ve had the patience to read five articles’ worth of my bullshit, the least I can do for you is to genuinely listen to your feedback and ideas about Not-An-Alarm, so feel free to contact me here, on LinkedIn or in private.
